When using the Concurrent Low Pause or Concurrent Mark Sweep Garbage collector with a Sun Hotspot JVM, you may observe the following "concurrent mode failures" errors in your GC logs or stderr:

(concurrent mode failure): 1404669K->602298K(1482752K), 35.9660286 secs] 1988728K->602298K(2096576K), 46.2083962 secs]

A concurrent mode failure indicates that a concurrent garbage collection of the tenured generation did not complete before the tenured generation filled up.

Impact of a concurrent mode failure: When a 1.4 JVM encounters a concurrent mode failure, it will trigger the default stop-the-world garbage collector in the tenured generation, resulting in a relatively long pause. In the example error above, note that the concurrent mode failure results in a GC (default stop-the-world) of around 46 seconds.

When does it occur?: A concurrent mode failure typically occurs with the following scenarios:

(1) Heavy load: Heavy load on the application running in the JVM, causing a huge promotion from the young to the tenured generation is typically what causes concurrent mode failures.

(2) Young generation guarantee failure: With JVM 1.4, a requirement for successful promotions from young to tenured generation is to have a contiguous block of free space equal to the size of the young generation, in the tenured generation to cater to the worst case requirement of having to promote all objects. Even if there is required space in the tenured generation, but it is not contiguous (i.e. it is fragmented), promotion failures and concurrent mode failures could occur. Fragmentation problems typically manifest themselves after a long period of continuous use. So, your application and JVM may be running fine for a while and suddenly (when the tenured generation is too fragmented) exhibit these problems.

How to fix it?: To fix these concurrent mode failures with JVM 1.4, typical solutions are:

(1) Increase the size of the JVM heap and consequently the size of the old generation.

(2) Tune the JVM depending on your application profile.

(3) Scale your platform to distribute load and subject each JVM to a smaller load.

JVM Tuning: My colleagues and I observed that our Hotspot 1.4.2_11 JVMs running WebLogic 8.1 SP6 threw several concurrent mode failures during peak load, at specific times of the day. The JVM heap for each WebLogic managed server was 2 GB which was already quite big (cannot increase much more anyway, as were using a 32-bit 1.4.2 JVM on Solaris 9). So, we decided to tune the JVM. Here are details of our JVM tuning to significantly reduce the number of concurrent mode failures:

(1) JVM Options before tuning: Given below, are the JVM parameters used when the problem occurred (WLjvm output):

************************************************************************************************** JVM DETAILS FOR WEBLOGIC SERVER m1 (PID = 9999) ************************************************************************************************** VERSION & BUILD =============== java version "1.4.2_11" Java(TM) 2 Runtime Environment, Standard Edition (build 1.4.2_11-b06) Java HotSpot(TM) Client VM (build 1.4.2_11-b06, mixed mode) OPTIONS ======= -Dbt.weblogic.RootDirectory=/software/weblogic -Dbt.weblogic.RootDirectory=/software/weblogic -Dweblogic.Name=m1 -Dweblogic.ProductionModeEnabled=true -Dweblogic.management.server=http://localhost:50000 -Dweblogic.system.BootIdentityFile=/software/weblogic/boot.properties -XX:+CMSParallelRemarkEnabled -XX:+JavaMonitorsInStackTrace -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+UseConcMarkSweepGC -XX:+UseLWPSynchronization -XX:+UseParNewGC -XX:+UsePerfData -XX:MaxNewSize=600m -XX:MaxPermSize=128m -XX:NewSize=600m -Xloggc:/software/weblogic/logs/GC/m1_200909012300.log -Xms2048m -Xmx2048m -Xoss2m -Xss2m -server ************************************************************************************************** |

(2) GC Analysis: I used PrintGCStats to analyze GC logs. It is also important to determine the CMSInitiatingOccupancyFraction (the % occupancy of the tenured generation at which a CMS GC is triggered). As the JVM options above do not set this parameter, the JVM calculates its own CMSInitiatingOccupancyFraction at runtime. The default for JVM 1.4.2 is 68%, but do NOT assume that the JVM always uses the default if you do not specify this parameter. Instead, analyze the GC logs to understand when the JVM triggers a CMS GC. The script CMSGCStats.ksh (formerly CMSGCTrigger.ksh) may be used for this purpose, along with the provision of other metrics. By using PrintGCStats and CMSGCTrigger.ksh, we determined that a lot of objects were promoted to the tenured generation and CMS GC was mostly triggered at 50% occupancy of the tenured generation with some occurrences between 60% to 75% also.

(3) Requirements Analysis: Our application mostly created short-lived objects and very low latency was not critical due to the mostly asynchronous services provided by the application. Hence, our goal was to retain most of the objects for as long as possible in the young generation, to facilitate their garbage collection in the Young generation, thereby promoting fewer objects to the tenured generation and reducing the probability of filling up the tenured generation during a CMS GC (causing concurrent mode failures).

(4) Implementation:

In order to garbage collect objects in the Young Generation and decrease the number of objects being promoted to the tenured generation, we tuned the following parameters:

| JVM Parameter | Why selected for tuning |

| -XX:NewSize, -XX:MaxNewSize | These parameters control the size of the Young generation. Increasing the size of the Young generation increases probability of more garbage collection in the Young Generation. Rule of thumb is to size the Young generation to 3/8 of the maximum heap size. |

| -XX:MaxTenuringThreshold | This parameter determines how long the objects in the Young generation may age (number of times they are copied between survivor spaces before being promoted to the Tenured generation). The default is 31. Increasing the value of this parameter increases probability of more garbage collection in the Young Generation. Refer to this article for more details. |

| -XX:TargetSurvivorRatio | This parameter sets the desired percentage of the survivor spaces which must be used before objects are promoted to the Tenured generation. The default is 50. Increasing the value of this parameter increases probability of more garbage collection in the Young Generation. |

| -XX:SurvivorRatio | This parameter is the ratio of the size of Eden to the size of each survivor space (SR = EdenSize/SurvivorSpaceSize) and hence it can be used to set the size of a survivor space. Decreasing the value of this parameter ensures larger survivor spaces and increases probability of more garbage collection in the Young Generation |

In order to override the JVM’s choice of when to trigger a CMS GC in the tenured generation, we tuned the following parameters:

| JVM Parameter | Why selected for tuning |

| -XX:CMSInitiatingOccupancyFraction | This parameter sets the threshold percentage occupancy of the old generation at which the CMS GC is triggered. The default for JVM 1.4.2 is 68, but the JVM deviates from this default at runtime. So, setting this parameter explicitly tells the JVM when to trigger CMS GC in the tenured generation. |

| -XX:+UseCMSInitiatingOccupancyOnly | This parameter tells the JVM to use only the value defined by -XX:CMSInitiatingOccupancyFraction , rather than try to also calculate the value at runtime. |

(5) Results: Based on 5 iterations of testing with different sets of the parameters mentioned above, we obtained best results with the following JVM parameters:

************************************************************************************************** JVM DETAILS FOR WEBLOGIC SERVER m1 (PID = 8888) ************************************************************************************************** VERSION & BUILD =============== java version "1.4.2_11" Java(TM) 2 Runtime Environment, Standard Edition (build 1.4.2_11-b06) Java HotSpot(TM) Client VM (build 1.4.2_11-b06, mixed mode) OPTIONS ======= -Dbt.weblogic.RootDirectory=/software/weblogic -Dbt.weblogic.RootDirectory=/software/weblogic -Dweblogic.Name=m1 -Dweblogic.ProductionModeEnabled=true -Dweblogic.management.server=http://localhost:50000 -Dweblogic.system.BootIdentityFile=/software/weblogic/boot.properties -XX:+CMSParallelRemarkEnabled -XX:+JavaMonitorsInStackTrace -XX:+PrintGCDetails -XX:+PrintGCTimeStamps -XX:+UseConcMarkSweepGC -XX:+UseLWPSynchronization -XX:+UseParNewGC -XX:+UsePerfData -XX:MaxPermSize=128m -XX:MaxNewSize=768m -XX:NewSize=768m -XX:MaxTenuringThreshold=8 -XX:TargetSurvivorRatio=90 -XX:SurvivorRatio=4 -XX:CMSInitiatingOccupancyFraction=55 -XX:+UseCMSInitiatingOccupancyOnly -Xloggc:/software/weblogic/logs/GC/m1_200909022300.log -Xms2048m -Xmx2048m -Xoss2m -Xss2m -server ************************************************************************************************** |

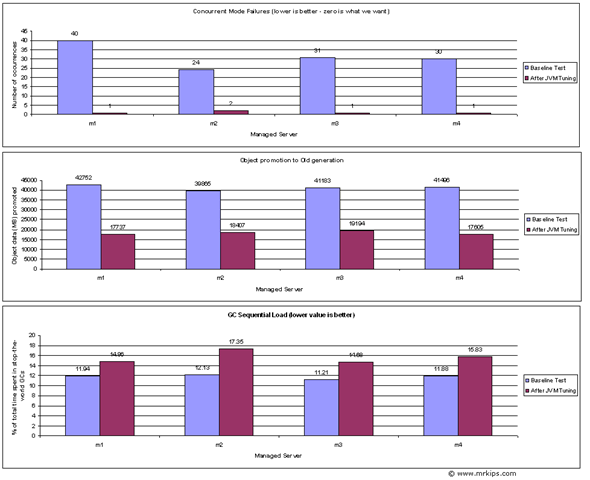

Some graphs of parameters before (baseline) and after JVM tuning for 4 WebLogic managed servers are given below:

The above graphs indicate the following:

(1) The number of occurrences of "concurrent mode failures" significantly decreased after JVM tuning, although these errors weren’t eliminated.

(2) The amount of objects (MB) promoted from the young to the tenured generation significantly decreased after JVM Tuning, thereby indication more garbage collection in the young generation.

(3) The GC Sequential load (% of total time spent in GC with all application threads suspended) increased after JVM Tuning. Although this is not desired, given that our application did not require very low latency, we were willing to make a compromise and accept the increase in GC Sequential load. As a matter of fact, when our JVM changes were rolled out onto Production, the GC Sequential load was only around 1%. We were just being cautious and testing our changes on a test environment with very high loads.

NOTE: The tuning exercise described above worked well for us. However, depending on your scenario, you may have to do further tuning. Also, there’s only so much you can benefit by tuning a JVM and you must give due consideration to scaling the platform and/or application design. In the example above, although JVM tuning helped, the load on our platform was too high and we had to scale our platform by adding more WebLogic managed servers (JVMs). Problems such as "concurrent mode failures" are typically caused by high loads when the CMS GC isn’t able to keep up with the rate of allocation of objects in the tenured generation.

REFERENCES:

(1) When the Sum of the parts – Jon Masamitsu

(2) What the Heck’s a Concurrent Mode? – Jon Masamitsu

Pingback:WebLogic – IP Multicast : A primer :Mr.KIPS

excellent article!

one question, what kind of gc occurs in the young region, does it stop the world?

Did you perform the tuning a test env or production , because this results would probably have come after trial and error method.

Hi,

Even the 1-2 concurrent mode failure is unacceptable (stop-the-world collection for a minute).

I know this post is pretty old, but in these cases you might try out

the incremental CMS, so the GC will be executing (almost) all the time in the background. This should work fine if you have 1-2 CPUs in your system (GC is not superseeded by app threads)

… and use more recent JRE version (1.6 G1 collector?)

@Sandor: Yes, you must try to avoid even a single occurrence of concurrent mode failure. Using iCMS with JRE 5 does help, but you should ensure you have sufficient processor time available. G1 replaced CMS from JRE 7 onwards and that’s supposed to be preferable.

what about promotion failed errors, this will also lead to a large pause time.

the error we get is

459051.025: [GC 459051.025: [ParNew (promotion failed): 706032K->706032K(707840K), 0.3068920 secs]459051.332: [CMS459052.853: [CMS-concurrent-sweep: 5.247/5.822 secs]

: 1245481K->1281925K(1572864K), 22.4610601 secs] 1908852K->1281925K(2280704K), 22.7691821 secs]

Whether promotion failed error same as concurrent mode failures?