Starting with Windows Server 2016, Microsoft introduced a new type of quorum witness for Windows Server Failover Clusters (WSFCs). Called a Cloud Witness, it uses Microsoft Azure Blob Storage as the witness and is particularly well suited to multi-site (stretched) WSFCs.

Cloud Witness Requirements

- An Azure Storage account, with its Storage Account Name and an Access Key.

- Internet or ExpressRoute connectivity over HTTPS (TCP 443) from all cluster nodes (the database servers) to https://<storageaccount>.blob.core.windows.net.
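Before configuring the witness, it can help to confirm that every node actually reaches the blob endpoint on port 443. A minimal sketch, assuming it is run from one of the cluster nodes (FailoverClusters module available) and that PowerShell remoting (WinRM) is enabled between nodes; <storageaccount> is the same placeholder as above:

```powershell
# Placeholder: replace with your storage account name.
$storageAccount = "<storageaccount>"
$endpoint = "${storageAccount}.blob.core.windows.net"

# Test TCP 443 (HTTPS) to the blob endpoint from every cluster node.
# Assumes PowerShell remoting (WinRM) is enabled on all nodes.
Invoke-Command -ComputerName (Get-ClusterNode).Name -ScriptBlock {
    [pscustomobject]@{
        Node      = $env:COMPUTERNAME
        # -InformationLevel Quiet returns $true/$false instead of a detailed object
        Reachable = Test-NetConnection -ComputerName $using:endpoint -Port 443 -InformationLevel Quiet
    }
}
```

Any node reporting False needs its proxy, firewall or routing fixed before the witness will work reliably from that node.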

Azure Storage Account Recommendations

Here are some recommendations for Azure Storage accounts used by Cloud Witnesses:

- Enforce access control with IAM (Azure RBAC) and the Azure Storage firewall to restrict access to authorized users and networks.

- Enforce TLS 1.2 (or a later TLS version) as the minimum protocol for access to the storage account.

- Add a ‘Delete’ resource lock to the storage account, since it is used by a core component of your WSFCs (the Cloud Witness).

- Store Azure Storage Account Access keys in a secure system on-premises or in Azure Key Vault for use by teams building WSFCs.
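The TLS, firewall and delete-lock recommendations can be applied with the Az PowerShell modules. A minimal sketch, assuming the Az.Storage and Az.Resources modules, an authenticated session (Connect-AzAccount), and hypothetical resource-group, account and IP-range values that you would replace with your own; IAM role assignments are left out:

```powershell
# Hypothetical names: replace with your own resource group, account and network range.
$rg      = "rg-cluster-witness"
$account = "stclusterwitness01"

# Enforce TLS 1.2 as the minimum protocol version for the account.
Set-AzStorageAccount -ResourceGroupName $rg -Name $account -MinimumTlsVersion TLS1_2

# Storage firewall: deny by default, then allow the (hypothetical) on-premises egress range.
Update-AzStorageAccountNetworkRuleSet -ResourceGroupName $rg -Name $account -DefaultAction Deny
Add-AzStorageAccountNetworkRule -ResourceGroupName $rg -Name $account -IPAddressOrRange "203.0.113.0/24"

# 'Delete' lock (CanNotDelete) so the account backing the Cloud Witness cannot be removed by accident.
New-AzResourceLock -LockName "cloud-witness-nodelete" -LockLevel CanNotDelete `
    -ResourceGroupName $rg -ResourceName $account `
    -ResourceType "Microsoft.Storage/storageAccounts" -Force
```

If the firewall default is set to Deny, the allowed ranges must cover the egress addresses of every cluster node; otherwise the witness itself becomes unreachable.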

Cloud Witness Deployment

Refer to the Microsoft documentation for an overview of the Cloud Witness and its deployment. The salient features are:

- When a cloud witness is configured for a cluster using an Azure storage account for the first time, a container called msft-cloud-witness is created within the storage account. The cluster then creates a file in that container named with its unique cluster ID.

- Multiple WSFCs may share the same Azure Storage Account, with each WSFC using its own unique file within the msft-cloud-witness container.
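The deployment described above can be sketched as follows, assuming the FailoverClusters module on a cluster node and, for the optional check, the Az.Storage module. The account name and key are placeholders; in practice the key would come from the secure store recommended earlier rather than being typed inline:

```powershell
# Run as Administrator on one cluster node.
# <StorageAccountName> and <StorageAccountAccessKey> are placeholders.
$accountName = "<StorageAccountName>"
$accessKey   = "<StorageAccountAccessKey>"

# Configure the Cloud Witness; the msft-cloud-witness container is created on first use.
Set-ClusterQuorum -CloudWitness -AccountName $accountName -AccessKey $accessKey

# Confirm the quorum configuration now references the Cloud Witness resource.
Get-ClusterQuorum | Format-List *

# Optional (Az.Storage): list the per-cluster files in the shared container.
# Each blob name should correspond to the unique ID of a cluster using this account.
$ctx = New-AzStorageContext -StorageAccountName $accountName -StorageAccountKey $accessKey
Get-AzStorageBlob -Container "msft-cloud-witness" -Context $ctx |
    Select-Object Name, LastModified
```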

WSFC+Cloud Witness: Resilience Tests

I performed resilience tests on an on-premises WSFC (Windows Server 2019) with a Cloud Witness; the details are provided below:

WSFC PARAMETERS

- In PowerShell, running as Administrator, execute the following commands on one of the cluster nodes:

```powershell
# Enable Site Fault Tolerance
(Get-Cluster).AutoAssignNodeSite = 1

# Set heartbeat frequency (ms) for nodes in the same subnet
(Get-Cluster).SameSubnetDelay = 1000

# Set minimum number of missed heartbeats between nodes in the same subnet before recovery action
(Get-Cluster).SameSubnetThreshold = 10

# Set heartbeat frequency (ms) for nodes in different sites
(Get-Cluster).CrossSiteDelay = 1000

# Set minimum number of missed heartbeats between nodes in different sites before recovery action
(Get-Cluster).CrossSiteThreshold = 10
```

NOTE:

- The heartbeat parameters above suited my two-site network infrastructure and availability requirements, but may be too aggressive for some environments.

- All other timeout parameters (Lease Time, HealthCheck Time, etc.) were kept with default values.
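As a rough rule, the time a node can go silent before the cluster takes recovery action is the heartbeat delay multiplied by the threshold. With the values above, that is 1000 ms × 10 = 10 seconds for both same-subnet and cross-site heartbeats. A small helper makes this trade-off explicit when tuning; the function name Get-HeartbeatWindowSeconds is hypothetical:

```powershell
# Hypothetical helper: approximate time (seconds) a node can miss heartbeats
# before recovery action, computed as delay (ms) x threshold / 1000.
function Get-HeartbeatWindowSeconds {
    param(
        [Parameter(Mandatory)][int]$DelayMs,    # e.g. SameSubnetDelay or CrossSiteDelay
        [Parameter(Mandatory)][int]$Threshold   # e.g. SameSubnetThreshold or CrossSiteThreshold
    )
    return ($DelayMs * $Threshold) / 1000
}

# Values used in these tests: 1000 ms x 10 missed heartbeats
Get-HeartbeatWindowSeconds -DelayMs 1000 -Threshold 10   # 10
```

On a live cluster the same helper can be fed the current settings, for example Get-HeartbeatWindowSeconds -DelayMs (Get-Cluster).CrossSiteDelay -Threshold (Get-Cluster).CrossSiteThreshold.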

TEST METHOD:

- The clustered role accessed by the test client during the resilience tests is a SQL Server Availability Group (AG plus AG listener).

- The test client (shell scripts) uses sqlcmd with the latest Microsoft ODBC Driver for SQL Server, which supports AG connection options such as MultiSubnetFailover and ApplicationIntent.

- Node failures were simulated by powering off the virtual machines (not a graceful shutdown; similar to holding down a laptop's power button to force it off).

- Network failures were simulated with firewall rules denying relevant traffic.

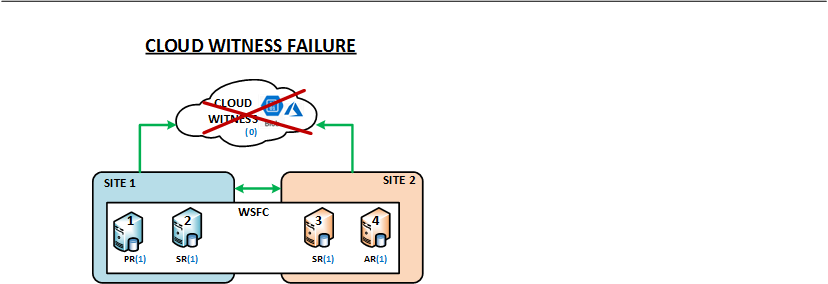

- The Cloud Witness failure was simulated by deleting the relevant Azure storage account.

NOTE: The client (simple scripts) does not simulate real-world applications, but offers some insight into the impact on clients.
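For the network-failure tests, the firewall rules can be scripted so they are easy to add and remove between runs. A minimal sketch, run on the nodes of one site, assuming the peer site uses the hypothetical subnet 10.20.0.0/24 and treating the cluster service port 3343 (UDP heartbeats and TCP) as the relevant traffic; the group name is also hypothetical, and the rules used in the original tests may have differed (depending on the scenario, other traffic such as the AG endpoint may also need blocking):

```powershell
# Hypothetical values: peer-site subnet and a rule group used to clean up afterwards.
$peerSubnet = "10.20.0.0/24"
$ruleGroup  = "WSFC-ResilienceTest"

# Block cluster service traffic (port 3343, UDP and TCP) to and from the peer site.
foreach ($proto in "UDP", "TCP") {
    New-NetFirewallRule -DisplayName "Block WSFC $proto 3343 in" -Group $ruleGroup `
        -Direction Inbound -Protocol $proto -LocalPort 3343 -RemoteAddress $peerSubnet -Action Block
    New-NetFirewallRule -DisplayName "Block WSFC $proto 3343 out" -Group $ruleGroup `
        -Direction Outbound -Protocol $proto -RemotePort 3343 -RemoteAddress $peerSubnet -Action Block
}

# ...run the test, then restore connectivity by removing every rule in the group.
Remove-NetFirewallRule -Group $ruleGroup
```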

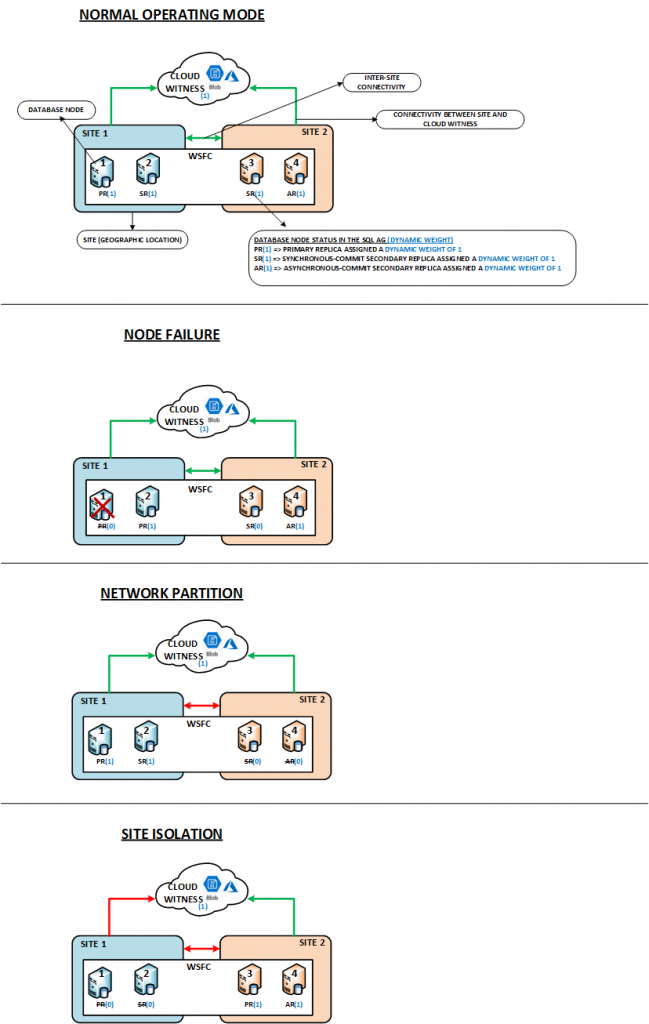

The images below describe the different types of resilience tests performed in the IA LAB.

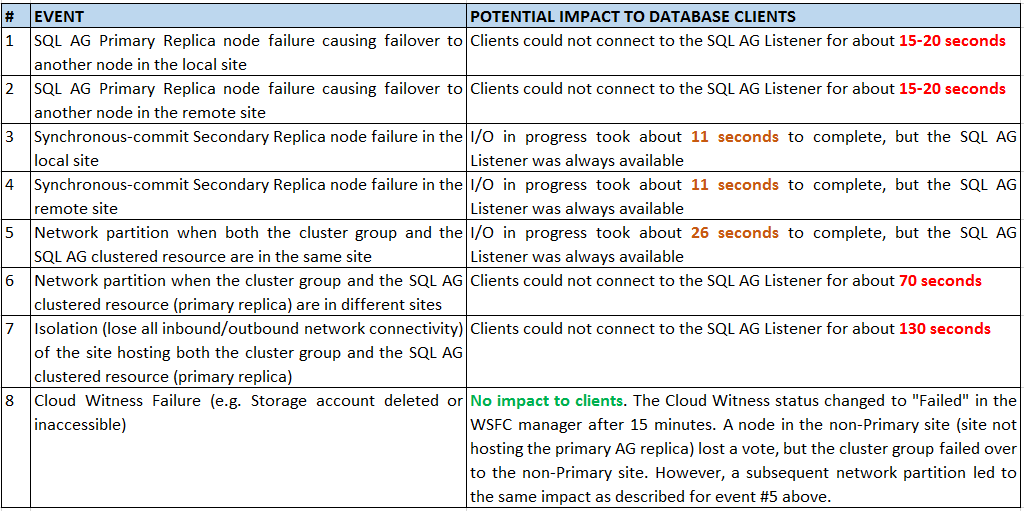

The following table summarizes the observations based on several resilience tests pertaining to the types of tests described above.

WSFC GUIDELINES

- Enable site fault tolerance, either by setting (Get-Cluster).AutoAssignNodeSite=1 or by manually configuring sites and node-to-site affinity with the New-ClusterFaultDomain and Set-ClusterFaultDomain PowerShell cmdlets.

- Do not set “Preferred owners” unless absolutely required and you know what you’re doing.

- Do not allow the cluster group and the SQL AG clustered resource to run in different sites. This causes no issue during normal operation; however, a network partition could cause the SQL AG clustered resource to fail over to the site hosting the cluster group.
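The guidelines above can be checked and applied with the FailoverClusters cmdlets. A minimal sketch, assuming two hypothetical sites (SiteA, SiteB), hypothetical node names, and a placeholder AG role name; moving the cluster group to the node that owns the AG role is just one simple way to keep both in the same site:

```powershell
# Manual alternative to AutoAssignNodeSite: define sites and pin each node to its site.
# Site and node names are hypothetical.
New-ClusterFaultDomain -Name "SiteA" -Type Site -Description "Primary datacenter"
New-ClusterFaultDomain -Name "SiteB" -Type Site -Description "Secondary datacenter"
Set-ClusterFaultDomain -Name "NODE1" -Parent "SiteA"
Set-ClusterFaultDomain -Name "NODE2" -Parent "SiteB"

# Review the resulting site/node topology.
Get-ClusterFaultDomain

# Check that no preferred owners are set on the AG role (expect an empty owner list).
$agRole = "<AG role name>"   # placeholder
Get-ClusterOwnerNode -Group $agRole

# Keep the core cluster group in the same site as the AG by moving it
# to the node that currently owns the AG role.
$agOwner = (Get-ClusterGroup -Name $agRole).OwnerNode.Name
Move-ClusterGroup -Name "Cluster Group" -Node $agOwner
```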