Oracle WebLogic Server (formerly BEA WebLogic) versions prior to 10.0 relied on IP multicast to maintain cluster membership. Versions 10.0 and later also offer unicast, which is preferred over multicast. This article covers the IP multicast used by WebLogic.

What is IP multicast?

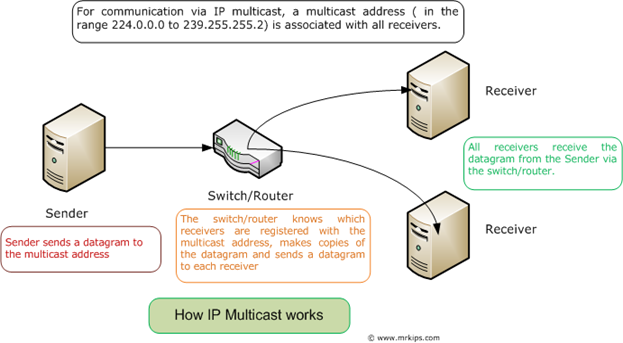

IP multicast is a technology for delivering data (datagrams) to a group of receivers across a network using IP. It relies on special IP addresses called multicast addresses. RFC 3171, which sets out the IANA guidelines for IPv4 multicast address assignments, designates addresses 224.0.0.0 to 239.255.255.255 as multicast addresses. A multicast address is associated with a group of receivers. When a sender wishes to send a datagram to that group, it sends the datagram to the multicast address/port associated with the group. Routers and switches on the network use IGMP to learn which servers (receivers) are associated with the multicast address, so when they receive the datagram they copy it and send a copy to every registered receiver. This is illustrated in the figure above.
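As a quick illustration of the address range mentioned above, the following minimal Python sketch checks whether an IPv4 address falls inside 224.0.0.0 to 239.255.255.255. The function name `is_multicast_address` is made up for this example; it only relies on Python's standard `ipaddress` module.

```python
import ipaddress


def is_multicast_address(address: str) -> bool:
    """Return True if the IPv4 address lies in 224.0.0.0 - 239.255.255.255."""
    ip = ipaddress.ip_address(address)
    # is_multicast is True for the 224.0.0.0/4 block on IPv4 addresses
    return ip.version == 4 and ip.is_multicast


print(is_multicast_address("237.0.0.1"))     # True
print(is_multicast_address("192.168.1.10"))  # False
```

A check like this can be handy before handing an address to a cluster configuration, since an ordinary unicast address will not work as a multicast address.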

Why does WebLogic use IP multicast?

A WebLogic cluster is a group of WebLogic servers that provide the same services for an application deployed on the cluster, with resilience (if a server crashes and exits the cluster, it can rejoin the cluster later), high availability (if a server in the cluster crashes, the other servers in the cluster continue to provide the services) and load balancing (the load can be distributed evenly across all servers in the cluster). WebLogic makes these clustering features possible by using IP multicast for the following:

(1) Cluster heartbeats: All servers in a WebLogic cluster must always know which servers are part of the cluster. To make this possible, each server in the cluster uses IP multicast to broadcast regular "heartbeat" messages that advertise its availability.

(2) Cluster-wide JNDI updates: Each WebLogic Server in a cluster uses IP multicast to announce the availability of clustered objects that are deployed or removed locally.
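To make the idea of cluster-wide JNDI updates more concrete, here is a small sketch of how a server might apply such announcements to a local view of clustered objects. This is only an illustration: the announcement format (a dict with `name`, `server` and `action`) and the function `apply_announcement` are assumptions made for this example, not WebLogic's actual message format or API.

```python
def apply_announcement(jndi_tree, announcement):
    """Update a local {object name: set of servers} map from one announcement.

    The announcement layout is hypothetical and exists only for this sketch.
    """
    name = announcement["name"]
    server = announcement["server"]
    if announcement["action"] == "deployed":
        # Another server now offers this clustered object
        jndi_tree.setdefault(name, set()).add(server)
    elif announcement["action"] == "removed":
        servers = jndi_tree.get(name, set())
        servers.discard(server)
        if not servers:
            # No server offers the object any more, so drop the entry
            jndi_tree.pop(name, None)
    return jndi_tree


tree = {}
apply_announcement(tree, {"name": "ejb/Order", "server": "server1", "action": "deployed"})
apply_announcement(tree, {"name": "ejb/Order", "server": "server2", "action": "deployed"})
apply_announcement(tree, {"name": "ejb/Order", "server": "server1", "action": "removed"})
print(tree)  # {'ejb/Order': {'server2'}}
```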

How does WebLogic use IP multicast?

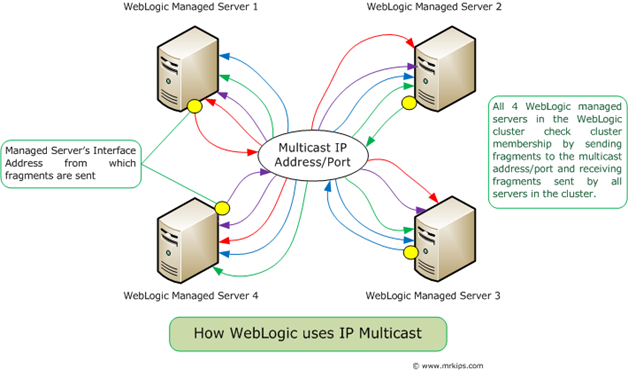

All servers in a WebLogic cluster send out multicast fragments (heartbeat messages) from their interface addresses to the multicast IP address and port configured for the cluster. All servers in the cluster are registered with that multicast address and port, so every server receives the fragments from all other servers in the cluster as well as the fragments it sent out itself. Every server sends out fragments every 10 seconds, so each server can determine from the fragments it receives which servers are still part of the cluster. If a server (say Server A) does not receive a fragment from another server in the cluster within 30 seconds (3 missed multicast heartbeats), it removes that server from its cluster membership list. When fragments from the removed server start arriving at Server A again, Server A adds that server back to its cluster membership list. In this way, every server in a WebLogic cluster maintains its own cluster membership list. For cluster-wide JNDI updates, each server instance in the cluster monitors the announcements from the other servers and updates its local JNDI tree to reflect current deployments of clustered objects.

Note: Clustered server instances also monitor IP sockets as a more immediate method of determining when a server instance has failed.

The figure above illustrates how a WebLogic cluster uses IP multicast.
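The membership rule described in this section (a heartbeat every 10 seconds, removal after 30 seconds of silence, re-addition when heartbeats resume) can be sketched in a few lines of Python. This is a simplified model for illustration only: the class `MembershipList` and its methods are hypothetical names, and time is passed in explicitly rather than read from a clock.

```python
HEARTBEAT_INTERVAL = 10            # seconds between heartbeats, per the text
TIMEOUT = 3 * HEARTBEAT_INTERVAL   # 3 missed heartbeats = 30 seconds


class MembershipList:
    """One server's own view of which servers are in the cluster."""

    def __init__(self):
        self.last_seen = {}  # server name -> time of last heartbeat

    def on_heartbeat(self, server, now):
        # Receiving a fragment (re-)adds the server to the view
        self.last_seen[server] = now

    def members(self, now):
        # A server drops out once nothing was heard from it for TIMEOUT seconds
        return sorted(s for s, t in self.last_seen.items() if now - t < TIMEOUT)


view = MembershipList()
view.on_heartbeat("server1", now=0)
view.on_heartbeat("server2", now=0)
view.on_heartbeat("server1", now=30)   # server2 has gone silent
print(view.members(now=30))            # ['server1']
view.on_heartbeat("server2", now=45)   # server2 is heard again
print(view.members(now=45))            # ['server1', 'server2']
```

Because every server keeps its own list, two servers can briefly disagree about membership, for example when only one of them is missing fragments.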

How do you configure and test multicast for a WebLogic Cluster?

Configuring IP Multicast for a WebLogic Cluster is simple. The steps required are given below:

STEP 1: If your WebLogic cluster is part of a network containing other clusters, obtain a multicast address and port for it from your network admins. The combination of multicast address and port must be unique for every WebLogic cluster. Several WebLogic clusters may share the same multicast address if and only if they use different multicast ports. Typically, network admins in an organization allocate multicast addresses and ports to the various projects to ensure there are no conflicts across the network. By default, WebLogic uses a multicast address of 237.0.0.1 and the listen port of the Administration server as the multicast port.
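The uniqueness rule in this step (clusters may share an address only if their ports differ) is easy to check mechanically if you keep an inventory of allocations. The sketch below assumes a simple dictionary of cluster name to (address, port); the cluster names and the function `find_conflicts` are invented for this example.

```python
from collections import defaultdict


def find_conflicts(clusters):
    """Return the (address, port) pairs that more than one cluster uses.

    clusters: dict mapping a cluster name to its (multicast address, port).
    """
    by_pair = defaultdict(list)
    for name, pair in clusters.items():
        by_pair[pair].append(name)
    return {pair: sorted(names) for pair, names in by_pair.items() if len(names) > 1}


# Hypothetical allocations: "orders" and "reports" clash, "billing" does not
allocations = {
    "orders": ("237.0.0.1", 30000),
    "billing": ("237.0.0.1", 30001),
    "reports": ("237.0.0.1", 30000),
}
print(find_conflicts(allocations))  # {('237.0.0.1', 30000): ['orders', 'reports']}
```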

STEP 2: Having obtained a multicast address and port for your WebLogic cluster, test them before starting the cluster to ensure that there are no network problems and no conflicts with other WebLogic clusters. You can do so with the MulticastTest utility provided with the WebLogic installation (part of weblogic.jar). An example test for a cluster containing 2 WebLogic servers on UNIX hosts and using a multicast address/port of 237.0.0.1/30000 is given below:

# Command to run on both server hosts (any one of the following within the WebLogic domain directory) to set the WebLogic domain environment

. ./setDomainEnv.sh

. ./setEnv.sh

# Command to run on server 1 (within any directory)

${JAVA_HOME}/bin/java utils.MulticastTest -N server1 -A 237.0.0.1 -P 30000

# Command to run on server 2 (within any directory)

${JAVA_HOME}/bin/java utils.MulticastTest -N server2 -A 237.0.0.1 -P 30000

# NOTE: The two java commands must run concurrently (the server 1 command on host 1 and the server 2 command on host 2) so that each test instance can receive the other's messages.
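For readers curious about what a multicast test does at the socket level, the following is a rough Python sketch of joining a multicast group and sending a datagram to it. It is not how utils.MulticastTest is implemented and does not replace it; the function names are made up for this example, and the address 237.0.0.1 and port 30000 are simply the values used in the test above.

```python
import socket
import struct


def membership_request(group, interface="0.0.0.0"):
    """Pack the group address followed by the local interface address."""
    return socket.inet_aton(group) + socket.inet_aton(interface)


def open_receiver(group, port, interface="0.0.0.0"):
    """Return a UDP socket that has joined the multicast group on the port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind(("", port))
    # Joining the group is what makes the host announce interest via IGMP
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_ADD_MEMBERSHIP,
                    membership_request(group, interface))
    return sock


def send_message(group, port, text, ttl=1):
    """Send one datagram to the multicast group with the given TTL."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM, socket.IPPROTO_UDP)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_MULTICAST_TTL, struct.pack("b", ttl))
    sock.sendto(text.encode("utf-8"), (group, port))
    sock.close()


# Example use (not executed here because it needs a multicast-capable network):
#   receiver = open_receiver("237.0.0.1", 30000)
#   send_message("237.0.0.1", 30000, "hello from server1")
#   data, sender = receiver.recvfrom(1024)
```

On a multi-NIC host, passing the address of a specific local interface instead of the default `0.0.0.0` plays a role similar to the Interface Address setting in WebLogic.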

The screenshots below show the tests executed (on Windows Vista) when the WebLogic cluster was running (conflicts between the test and the running cluster are outlined in red) and when the WebLogic cluster was stopped:

Note: On Vinny Carpenter’s blog, he mentions a problem when using the utils.MulticastTest utility bundled with WebLogic Server 8.1 SP4. Well, I have never faced any issues with the utils.MulticastTest utility, but I am not sure if I’ve used it with WLS 8.1 SP4.

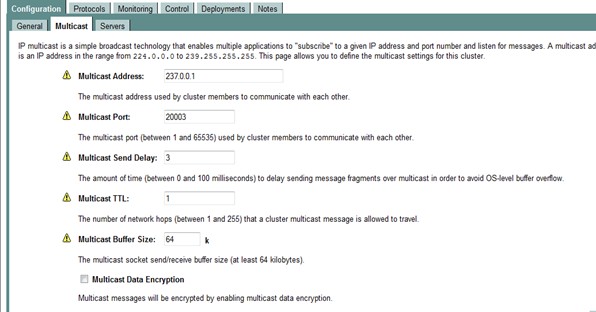

STEP 3: After successfully testing the multicast address and port, you may use the WebLogic Administration Console to configure multicast for the cluster. Descriptions of various multicast parameters are available on the console itself. The three most important parameters are (1) Multicast Address, (2) Multicast Port and (3) Interface Address. The Interface Address may be left blank if the WebLogic servers use their hosts’ default interface. On multi-NIC machines or in WebLogic clusters with Network channels, you may have to configure an Interface Address. Given below is a screenshot from a WLS 8.1 SP6 Administration Console indicating the various multicast parameters that may be configured for a cluster. Note that the interface address is on a different screen as it is associated with each server in the cluster, rather than the cluster itself.

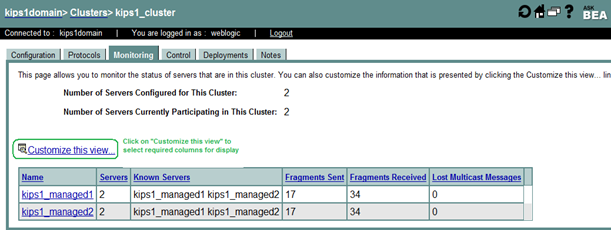

After configuring Multicast for a WebLogic cluster, you can monitor the health of the cluster and exchange of multicast fragments among the servers in the cluster by using the WebLogic Administration console. A screenshot of such a monitoring screen with WLS 8.1 SP6 is given below:

Note that the screenshot above indicates that:

(1) All servers are participating in the cluster ("Servers" column).

(2) Every server in the cluster is aware of every other server in the cluster. The "Known Servers" column is especially useful for large clusters to know exactly which servers are not participating in the cluster.

(3) The total number of fragments received by each server (34) is equal to the sum of all the fragments sent by all the servers in the cluster (17 + 17). Note that the "Fragments Sent" and "Fragments Received" columns on the console need not always indicate a correct relationship even if multicast works fine. That’s because these stats on the console are reset to 0 when servers are restarted.
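The relationship in point (3) can be expressed as a small consistency check. The sketch below assumes you have copied the "Fragments Sent" and "Fragments Received" values from the console into two dictionaries. The function names are hypothetical, and the check is only meaningful if no server was restarted since the counters started (restarts reset them to 0).

```python
def expected_received(fragments_sent):
    """Every server receives every fragment, including the ones it sent itself."""
    return sum(fragments_sent.values())


def check_counts(fragments_sent, fragments_received):
    """Return {server: True/False} telling whether each received count adds up."""
    expected = expected_received(fragments_sent)
    return {server: count == expected for server, count in fragments_received.items()}


sent = {"server1": 17, "server2": 17}
received = {"server1": 34, "server2": 34}
print(expected_received(sent))       # 34
print(check_counts(sent, received))  # {'server1': True, 'server2': True}
```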

Troubleshooting WebLogic’s Multicast configuration

When you encounter a problem with WebLogic multicast (or any problem, for that matter), it is important to confirm the problem by executing as many tests as possible and to gather as much data as possible while the problem occurs. For WebLogic multicast, you can confirm the problem by using the MulticastTest utility or by checking the Administration Console as described above. To troubleshoot WebLogic multicast, refer to the Oracle documentation. Also check the section below: if the problem you’ve encountered is similar to one of the problems described there, it may give you a quick resolution.

WebLogic Multicast eureka!

Given below are WebLogic multicast problems which I’ve encountered and investigated, along with solutions that worked:

PROBLEM 1:

SYMPTOMS: No WebLogic server could see any other server in the cluster. Tests using the MulticastTest utility failed, indicating that each server could only receive the multicast fragments which it sent out itself.

ANALYSIS: The MulticastTest utility was tried with the correct multicast address, multicast port and interface address. No conflict with any other cluster was observed, but no messages were received from other servers. Assuming that none of the servers in the cluster is hung, the symptoms indicate a problem with the underlying network or the multicast configuration on the network.

SOLUTIONS:

Solution 1: The Network Admin just gave us another multicast address/port pair and multicast tests worked. The multicast address/port pair which failed was not registered correctly on the network.

Solution 2: The Network Admin informed us that more than one switch was used on the cluster network and that this configuration did not ensure that multicast fragments sent by one server in the cluster were copied and transmitted to the other servers in the cluster. Refer to this Cisco document for details regarding this problem and its solutions. As a tactical solution, the Network Admin configured static multicast MAC entries on the switches (Solution 4 in the Cisco document). This tactical solution requires the Network Admin to maintain those static entries, but since there weren’t too many WebLogic clusters using multicast on the network, this solution was chosen.

Solution 3: The two managed servers in a cluster were in geographically separated data centres and several hops across the network were required for the servers to receive each other’s multicast fragments. Increasing the multicast TTL solved this problem and both the MulticastTest utility and the WebLogic servers successfully multicasted.
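The TTL issue in Solution 3 comes down to simple arithmetic: each router on the path decrements the datagram's TTL and discards the datagram when the TTL reaches 0, so a TTL of 1 keeps traffic on the local subnet. The sketch below models that rule. The function names and the hop counts in the example are illustrative assumptions, not measurements from the environment described above.

```python
def reaches_receiver(ttl, routers_on_path):
    """True if a datagram sent with this TTL survives all routers on the path.

    Each router decrements the TTL and drops the datagram once it hits 0,
    so the TTL must be larger than the number of routers crossed.
    """
    return ttl > routers_on_path


def minimum_ttl(routers_on_path):
    """Smallest TTL that still lets the datagram reach the receiver."""
    return routers_on_path + 1


print(reaches_receiver(ttl=1, routers_on_path=0))  # True  (same subnet)
print(reaches_receiver(ttl=1, routers_on_path=3))  # False (dropped at the first router)
print(minimum_ttl(3))                              # 4
```

Raising the TTL only as far as needed keeps the cluster's multicast traffic from spreading further across the network than it has to.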

PROBLEM 2:

SYMPTOMS: The following errors were seen in the WebLogic managed server logs and the managed servers did not start.

Exception:weblogic.server.ServerLifecycleException: Failed to listen on multicast address

weblogic.server.ServerLifecycleException: Failed to listen on multicast address

at weblogic.cluster.ClusterCommunicationService.initialize()V(ClusterCommunicationService.java:48)

at weblogic.t3.srvr.T3Srvr.initializeHere()V(T3Srvr.java:923)

at weblogic.t3.srvr.T3Srvr.initialize()V(T3Srvr.java:669)

at weblogic.t3.srvr.T3Srvr.run([Ljava/lang/String;)I(T3Srvr.java:343)

at weblogic.Server.main([Ljava/lang/String;)V(Server.java:32)

Caused by: java.net.BindException: Cannot assign requested address

at jrockit.net.SocketNativeIO.setMulticastAddress(ILjava/net/InetAddress;)V(Unknown Source)

at jrockit.net.SocketNativeIO.setMulticastAddress(Ljava/io/FileDescriptor;Ljava/net/InetAddress;)V(Unknown Source)

.

.

.

ANALYSIS: The errors occurred irrespective of which multicast address/port pair was used. The error indicates that the WebLogic server could not bind to a local address from which to send datagrams to the multicast address, i.e. it could not bind to its Interface Address.

SOLUTION: The WebLogic server host was a multi-NIC machine and another interface had to be specified for communication with the multicast address/port. Specifying the correct interface address solved the problem.
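The "Cannot assign requested address" error is what the operating system reports when a program tries to bind to an IP address that is not assigned to any local interface. A quick way to check a candidate interface address outside WebLogic is to try binding a throwaway UDP socket to it, as in the sketch below. The function name `can_bind` is made up for this example, and the check only covers whether the address is local, not whether multicast is routed correctly from that interface.

```python
import socket


def can_bind(address):
    """Return True if a UDP socket can be bound to this local address."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.bind((address, 0))  # port 0 lets the OS pick any free port
        return True
    except OSError:
        # Raised when the address does not belong to a local interface
        return False
    finally:
        sock.close()


print(can_bind("127.0.0.1"))  # True on a host with a loopback interface
```

Running this on the server host with the value intended for the Interface Address shows whether that address actually belongs to one of the host's NICs.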

PROBLEM 3:

SYMPTOMS: The following errors were seen in the WebLogic managed server logs. The managed servers were running, but clustering features (like JNDI replication) were not working.

<May 20, 2008 4:00:58 AM BST> <Error> <Cluster> <kips1host> <kips1_managed1> <[ACTIVE] ExecuteThread: '1' for queue: 'weblogic.kernel.Default (self-tuning)'> <<WLS Kernel>> <> <> <1211252458100> <BEA-000170> <Server kips1_managed1 did not receive the multicast packets that were sent by itself>

<May 20, 2008 4:00:58 AM BST> <Critical> <Health> <kips1host> <kips1_managed1> <[ACTIVE] ExecuteThread: '2' for queue: 'weblogic.kernel.Default (self-tuning)'> <<WLS Kernel>> <> <> <1211252458100> <BEA-310006> <Critical Subsystem Cluster has failed. Setting server state to FAILED. Reason: Unable to receive self generated multicast messages>

ANALYSIS: The errors above indicate that the WebLogic server kips1_managed1 could not receive its own multicast fragments from the multicast address/port. Most likely, the server’s multicast fragments did not reach the multicast address/port in the first place. That points to an issue with the interface address, the route from the interface address to the multicast address/port, or the multicast address/port itself. The interface address or the route is the more likely culprit: if the multicast address/port were wrong, the server would simply not have received multicast fragments from any other server, as in PROBLEM 1.

SOLUTION: The WebLogic server kips1_managed1 used the -Dhttps.nonProxyHosts and -Dhttp.nonProxyHosts JVM parameters in its server startup command, and these parameter values did not contain the name of the host on which kips1_managed1 ran. After including the relevant hostname in these parameter values, the errors stopped occurring. I am not sure how these HTTP proxy parameters affected self-generated multicast messages (will try to investigate this).
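Whatever the root cause was, the fix amounted to making sure the server's own host name was covered by the nonProxyHosts value. The sketch below shows one way to check that for a given value. It assumes the usual format of that property (patterns separated by `|`, with `*` as a wildcard) and approximates the matching with Python's `fnmatch`, which is more permissive than the JVM's own matching. The function name `bypasses_proxy` and the sample values are invented for illustration.

```python
from fnmatch import fnmatchcase


def bypasses_proxy(host, non_proxy_hosts):
    """True if host matches one of the '|'-separated nonProxyHosts patterns.

    Approximation only: fnmatch also understands '?' and '[...]', which the
    JVM's nonProxyHosts matching does not.
    """
    patterns = [p.strip().lower() for p in non_proxy_hosts.split("|") if p.strip()]
    return any(fnmatchcase(host.lower(), p) for p in patterns)


print(bypasses_proxy("kips1host", "localhost|127.*"))            # False
print(bypasses_proxy("kips1host", "localhost|127.*|kips1host"))  # True
```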

PROBLEM 4:

SYMPTOMS: Most of the time all WebLogic servers were part of the cluster, but intermittently servers were removed from the cluster and added back, and the LostMulticastMessageCount kept increasing for some servers in the cluster. However, tests using the MulticastTest utility (when the cluster was stopped) were successful.

ANALYSIS: The problem occurred intermittently when the WebLogic servers were running, but never occurred when using the MulticastTest utility. This indicates that the underlying IP multicast works fine and something is preventing the servers in the cluster from IP multicasting properly. Further analysis revealed that the servers had issues with JVM Garbage Collection with long stop-the-world pauses (> 30 secs) during which the JVM did absolutely nothing else other than garbage collection. Also, the times of occurrences of these long GC pauses correlated with the times of increases in LostMulticastMessageCount and removal of servers from the cluster.

SOLUTION: The JVMs hosting the WebLogic servers were tuned to minimize stop-the-world GC pauses to allow the servers to multicast properly. For the specific GC problem I encountered, you may refer to the tuning details here.
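The link between GC pauses and cluster removals can be estimated with the heartbeat figures given earlier in this article (a heartbeat every 10 seconds, removal after 3 missed heartbeats). The sketch below flags the pauses long enough to get a server dropped by its peers. It is a rough model with hypothetical function names and made-up pause durations: real GC logs need parsing first, and the exact number of missed heartbeats depends on when the pause starts relative to the heartbeat schedule.

```python
HEARTBEAT_INTERVAL = 10  # seconds, per the heartbeat description in this article
MISSED_LIMIT = 3         # missed heartbeats before peers remove the server


def missed_heartbeats(pause_seconds):
    """Approximate number of heartbeats a paused JVM fails to send."""
    return int(pause_seconds // HEARTBEAT_INTERVAL)


def pauses_causing_removal(pauses):
    """Return the pauses long enough for peers to drop the server."""
    return [p for p in pauses if missed_heartbeats(p) >= MISSED_LIMIT]


# Hypothetical stop-the-world pause durations (seconds) taken from a GC log
gc_pauses = [2.5, 12, 31, 45.2]
print(pauses_causing_removal(gc_pauses))  # [31, 45.2]
```

Comparing the timestamps of the flagged pauses with the times at which servers left the cluster is the kind of correlation described in the analysis above.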

Reference: Oracle documentation


The Information in this Site was very helpful.

Thanks a lot,

Vijaya

This post is really very helpful and easy for newbies to understand. Thanks a lot. Did you write in detail about “unicast” anywhere?

Thanks

santhosh

this post is really good and it cleared lot of doubts on multicast.

thanks alot..

This is really very helpful for WebLogic administrators.

Nice post. Very helpful and easily understandable.

Very useful information. Is the MultiCast Monitor tool useful at all?

Good info, but can you please let us know about the investigation and root cause for Problem 3?

Ryan, I didn’t get a chance to investigate that problem and have long left that project. Thinking about it now, perhaps those errors were thrown when different proxies were used, as different network routes and switches would then have been used, and those switches may not have been configured for the cluster’s multicast traffic. But I’m not sure about this hypothesis. However, as per my observation, when those proxy system properties were changed, multicast started working fine again, and re-introducing the old properties caused multicast to fail again. Sorry, I’m not certain about the root cause of this problem.

this post is really helpful

This was really a good article.

Helpful post.. tnx

Awesome post, thanks a ton. I have an issue with WLS 8: the app servers are shown as ‘Unknown’ while they are actually processing requests. I suspect a multicast storm, as we have 10 managed servers in the cluster sharing the multicast address, and the multicast buffer is also small (64 KB). Can you tell me how I can confirm that this is the cause? I mean, what would appear in the logs? As I am dealing with production, I need confirmation before I go ahead and increase the multicast buffer.

Ankit, you’re welcome. Well, I have worked on weblogic clusters with 12 managed servers and haven’t experienced multicast issues, but that depends on how promptly the servers process the multicast messages and the health of the network.

I recommend the following:

(1) If you do get a maintenance window, then use the multicast test utility for all managed servers for at least 10 minutes and check if every server can receive packets from every other server as well as its own packets.

(2) In order to get more information in the server logs, you could enable the following debug flags:

Set these flags from the command line during server startup by adding the following options:

* -Dweblogic.debug.DebugCluster=true

* -Dweblogic.debug.DebugClusterHeartBeats=true

* -Dweblogic.debug.DebugClusterFragments=true

All the best!

Great Article. Thanks for sharing.

Best explanation …. No pits to fall.

Hi thanks for ur valuable information. it is very helpful to every one.

thanks..article really was useful.. i understood basics of weblogic cluster multicasting 🙂

A++ article, making hard stuff look easy.